Ijraset Journal For Research in Applied Science and Engineering Technology


SignEase - Sign Language Interpreter Model: An Indian Sign Language Interpretation Model using Machine Learning and Computer Vision Technology

Authors: Purvi Passi, Jessica Pereira, Anjelica Misal, Viven Menezese, Dr. M. Kiruthika

DOI Link: https://doi.org/10.22214/ijraset.2024.60728


Abstract

This study introduces a real-time system that recognizes hand poses and gestures from Indian Sign Language (ISL) using grid-based (control-point) features. The primary aim is to bridge the communication barrier between hearing- and speech-impaired individuals and the broader society. Existing solutions often struggle with either accuracy or real-time performance, whereas our system is designed to deliver both. In addition to recognizing ISL hand gestures, the system offers a ’Learning Portal’ where users can learn and practice ISL, ASL, and other sign languages, which improves its accessibility and effectiveness. The system operates solely on smartphone camera input, eliminating the need for external hardware such as gloves or specialized sensors. Key techniques include hand detection via modules such as MediaPipe and cvzone, and grid-based feature extraction, which transforms hand poses into concise feature vectors. These features are then compared against a TensorFlow-provided database for classification and translation.

Introduction

I. INTRODUCTION

The research outlined in this paper focuses on Indian Sign Language (ISL), a vital means of communication for the hearing and speech impaired community. ISL comprises gestures representing complex words and sentences, encompassing 33 hand poses, including digits and letters. Certain letters, such as ’h’ and ’j’, are conveyed through gestures, while ’v’ resembles the digit 2. Understanding ISL gestures can be challenging for many, leading to a communication gap between those familiar with ISL and those who aren’t.

To bridge this gap, a real-time translation solution was developed, utilizing an Android smartphone camera to capture hand poses and gestures, with processing handled by a server. The system aims for fast and accurate recognition, supplemented by a Learning Portal to aid in basic ISL comprehension. The system successfully identifies all 33 ISL hand poses, initially focusing on single-handed gestures but with potential for extension to two-handed gestures. The paper proceeds to discuss related work on sign language translation, followed by an explanation of the techniques employed for frame processing and gesture translation. Experimental results are presented, along with details of the Android application enabling real-time Sign Language Translation. Finally, future directions for ISL translation research are discussed in the concluding section.

A. Background

In a world marked by diversity, effective communication is essential for harmony. With nearly 20 million people in India facing speech disabilities and 466 million globally experiencing hearing loss[1], a significant gap exists between sign and spoken language, leading to isolation. This project addresses the issue by developing technology to bridge this gap, aiming to connect and include individuals with hearing challenges, with a vision for a more inclusive future.

B. Motivation

The Sign Language to English Interpreter project is motivated by a sincere commitment to promote inclusive communication for sign language users. Its main goal is to empower the deaf community, enabling their seamless integration into the global community. The project seeks to break down communication barriers, envisioning a society where individuals, irrespective of their hearing abilities, can learn, access information, and engage freely. At its core, this initiative is rooted in the belief that technology can foster mutual understanding, paving the way for a more inclusive future.

C. Aim and Objective

The Sign Language to English Interpreter project is focused on developing a reliable system that translates sign language gestures into English text. This system serves as a real-time communication bridge across various domains, including education, healthcare, public services, and daily interactions. The project’s key goals include creating a smart gesture recognition system capable of understanding diverse sign language movements, ensuring precision in interpretation by converting recognized gestures into accurate English text, and designing a user-friendly interface to make the technology accessible to everyone, regardless of their background.

D. Scope

The project is aimed at designing and implementing a mobile application to facilitate sign language interpretation, bridging communication gaps between individuals who are deaf or hard-of-hearing and those unfamiliar with sign language. The current scope of the model includes interpreting hand gestures into English text, with a future goal to implement text-to-gesture translation.

Moreover, the project can be deployed to its target audience in collaboration with schools, hospitals, and NGOs. Additionally, the project will encompass the development of a functional prototype for demonstration and evaluation purposes. The scope extends to testing the application’s usability and effectiveness in real-world scenarios, ensuring it meets the needs of its target users. Ongoing refinements may be made based on user feedback to enhance the overall performance and user experience of the SignEase app.

II. LITERATURE REVIEWS

The research landscape for improving sign language translation and interpretation is diverse and dynamic, with several notable projects aiming to enhance communication for the deaf and mute community. One such endeavor focuses on refining sign language translation models, particularly in handling longer sentences. This initiative demonstrated significant progress, surpassing baseline models by more than 1.5 BLEU-4 score points, indicating a notable improvement in translation quality[2].

Another critical area of exploration involves the development of systems translating English text into Indian Sign Language (ISL), aiming to facilitate communication in specific contexts like railway reservation counters[3]. However, the lack of standardized grammar in ISL poses challenges for traditional rule-based translation methods, necessitating innovative approaches in system development. Furthermore, there are efforts to create sign language interfaces (SLIs) using various technological modalities. For instance, one project utilizes video-based methods to recognize American Sign Language (ASL) gestures, aiming to establish online communication platforms for the deaf and mute community. This approach circumvents the limitations of sensor-based techniques, which often require specialized equipment like gloves or sensors[4]. Additionally, mobile applications equipped with electromyography (EMG) sensors are being designed to interpret ASL gestures[5], translating them into written text and spoken English. Conversely, the system can also convert spoken English into text, providing Deaf individuals with versatile communication tools. These initiatives collectively strive to advance sign language translation and interpretation technologies, catering to the unique linguistic and technological needs of diverse sign language communities.

III. STUDY REVIEW

In the realm of Sign Language Recognition (SLR), the journey begins with data acquisition, a crucial step laying the foundation for model training. Whether sourcing from existing datasets or curating new ones, the quality and relevance of the data significantly impact the subsequent stages. Pre-processing follows, where data refinement is conducted to optimize classifier performance by mitigating inaccuracies arising from flawed data. Subsequently, segmentation emerges as a pivotal process, particularly in isolating hand gestures amidst complex backgrounds, thereby facilitating focused analysis.

Feature extraction then ensues, where pertinent information is distilled from the region of interest, shaping the foundation for gesture recognition. Ultimately, classification emerges as the pinnacle, delineating the categorization and identification of sign language expressions into coherent linguistic or semantic classifications, thus culminating the SLR pipeline.

1. Data Acquisition – The first step is to collect the data that will be used to train the model. This may involve sourcing data from existing online platforms such as Kaggle, or building a new, independent dataset.

2. Pre-processing – Pre-processing is the stage where the data is modified after it has been obtained. It helps the classifier perform better by removing bad data that may cause inaccuracy. A few commonly used pre-processing methods are compared in Table I.[6]

TABLE I

PRE-PROCESSING METHOD COMPARISON

| Method | Advantage | Disadvantage |
|---|---|---|
| Gaussian Filter | Reduce noise; Smoothens the image. | Reduce details. |
| Median Filter | Filter out the noise; Preserve sharp features. | No Error Propagation. |
| Image Cropping | Consistent input. | - |
| Bootstrapping | Avoid loss of information; Simple implementation. | Time consuming. |

3. Segmentation – For SLR, the most important information is the hand gesture, which is conveyed by hand movements. Image segmentation is therefore applied to remove unwanted data, such as the background and other objects in the input, that might interfere with the classifier. It works by limiting the region of the data so that the classifier looks only at the Region of Interest (ROI). Some types of segmentation techniques have been compared in Table II.[6]

4. Feature Extraction – Feature extraction is the stage where relevant information is taken from the data and enhanced. It discards redundant information from the region of interest and derives the features used by the classifier. For SLR, these features vary depending on what the researcher considers useful for recognizing gestures.

5. Classification – Classification refers to the process of categorizing or identifying signs, gestures, or movements made in sign language into specific linguistic or semantic categories.
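
As an illustration of the pre-processing step listed above, the following is a minimal sketch of the median filter from Table I, written in plain Python over a 2D list of grayscale values. The function name, the window size, and the sample patch are assumptions made for this example and are not taken from the system described in this paper.

```python
from statistics import median


def median_filter(image, size=3):
    """Apply a size x size median filter to a 2D list of grayscale values.

    Border pixels use only the neighbours that fall inside the image.
    """
    height, width = len(image), len(image[0])
    radius = size // 2
    result = [[0] * width for _ in range(height)]
    for row in range(height):
        for col in range(width):
            window = [
                image[r][c]
                for r in range(max(0, row - radius), min(height, row + radius + 1))
                for c in range(max(0, col - radius), min(width, col + radius + 1))
            ]
            result[row][col] = median(window)
    return result


# A flat 5x5 patch with a single "salt" noise pixel in the centre
# (values invented for illustration).
noisy = [[10] * 5 for _ in range(5)]
noisy[2][2] = 255
cleaned = median_filter(noisy)
```

In this toy patch the isolated bright pixel is replaced by the value of its neighbours, which is the noise-removal behaviour Table I attributes to the median filter. A production pipeline would more likely rely on an image-processing library than on nested Python loops.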

TABLE II

FEATURE EXTRACTION METHOD COMPARISON

| Method | Advantage | Disadvantage |
|---|---|---|
| Cross-Correlation Coefficient | Low computation | Too simple for classification. |
| Support Vector Machine | Memory efficient. Effective for classification. | Low performance when noise is in the data |
| Artificial Neural Network | Able to learn. Robust fault-tolerant network. | Slow convergence speed. |
| Convolutional Neural Network | Highly accurate for image classification. Can work well even without segmentation or pre-processing | High computation. Need strong hardware. |
| Convolutional Neural Network with Transfer Learning | Highly accurate for image classification. Saves time since it is pre-trained. | High computation. Need strong hardware. Preprocessing needed to fit the network. |
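
To make the first row of Table II concrete, the sketch below classifies a feature vector by picking the stored template with the highest cross-correlation (Pearson) coefficient. The template vectors, labels, and function names are hypothetical and invented for illustration; the paper does not state that this method is used in SignEase.

```python
import math


def correlation(a, b):
    """Pearson cross-correlation coefficient between two equal-length vectors."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    norm_a = math.sqrt(sum((x - mean_a) ** 2 for x in a))
    norm_b = math.sqrt(sum((y - mean_b) ** 2 for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a constant vector carries no shape information
    return cov / (norm_a * norm_b)


def classify(features, templates):
    """Return the label whose template correlates best with `features`."""
    return max(templates, key=lambda label: correlation(features, templates[label]))


# Hypothetical 6-element feature vectors, invented for illustration only.
templates = {
    "A": [1, 0, 0, 1, 1, 0],
    "B": [0, 1, 1, 0, 0, 1],
}
predicted = classify([0.9, 0.1, 0.0, 0.8, 1.0, 0.2], templates)
```

The approach needs only a handful of multiplications per template, which matches the "low computation" entry in the table. It also has no learned decision boundary, which is one way to read the "too simple for classification" drawback.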

In essence, Sign Language Recognition embarks on a journey of meticulous data refinement and analysis, commencing with data acquisition and traversing through pre-processing, segmentation, feature extraction, and classification. Each phase, intricately intertwined, contributes to the overarching objective of accurately deciphering sign language expressions. Through these systematic stages, the intricate nuances of sign language are translated into meaningful linguistic or semantic categories, fostering enhanced communication and accessibility for the deaf and mute community. As technology continues to evolve, the refinement of these processes stands poised to further empower individuals through improved Sign Language Recognition systems.
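
The segmentation stage of this pipeline can be sketched in a few lines. The example below uses a plain brightness threshold as a stand-in for a real hand detector or skin-colour model, and crops the frame to the bounding box of the pixels that pass the threshold. The threshold value, the function name, and the sample frame are assumptions made for illustration.

```python
def segment_roi(image, threshold=128):
    """Crop a 2D grayscale image to the bounding box of pixels above `threshold`.

    Returns None when no pixel exceeds the threshold.
    """
    rows = [r for r, line in enumerate(image) if any(v > threshold for v in line)]
    cols = [c for c in range(len(image[0])) if any(line[c] > threshold for line in image)]
    if not rows:
        return None
    top, bottom = rows[0], rows[-1]
    left, right = cols[0], cols[-1]
    return [line[left:right + 1] for line in image[top:bottom + 1]]


# A tiny frame with a bright "hand" region on a dark background
# (values invented for illustration).
frame = [
    [0,   0,   0, 0, 0],
    [0, 200, 210, 0, 0],
    [0, 190,  50, 0, 0],
    [0,   0,   0, 0, 0],
]
roi = segment_roi(frame)
```

Everything outside the returned region is discarded, so later stages only see the Region of Interest. Note that the dim pixel inside the bounding box is kept, since the crop is rectangular rather than a per-pixel mask.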

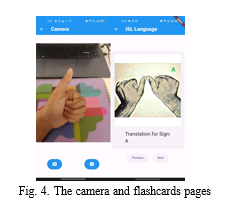

A. Proposed System

The developed system represents a significant advancement in the domain of sign language interpretation, particularly focusing on the Indian Sign Language (ISL). Leveraging TensorFlow, Teachable Machines, and Python, the system has been engineered to create an interpreter model tailored specifically for ISL gestures. Notably, this model distinguishes itself from existing systems through its capability to interpret ISL gestures that involve the coordinated movements of both hands, in contrast to the predominant focus on single-handed gestures, as observed in American Sign Language (ASL) systems.

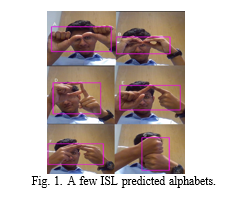

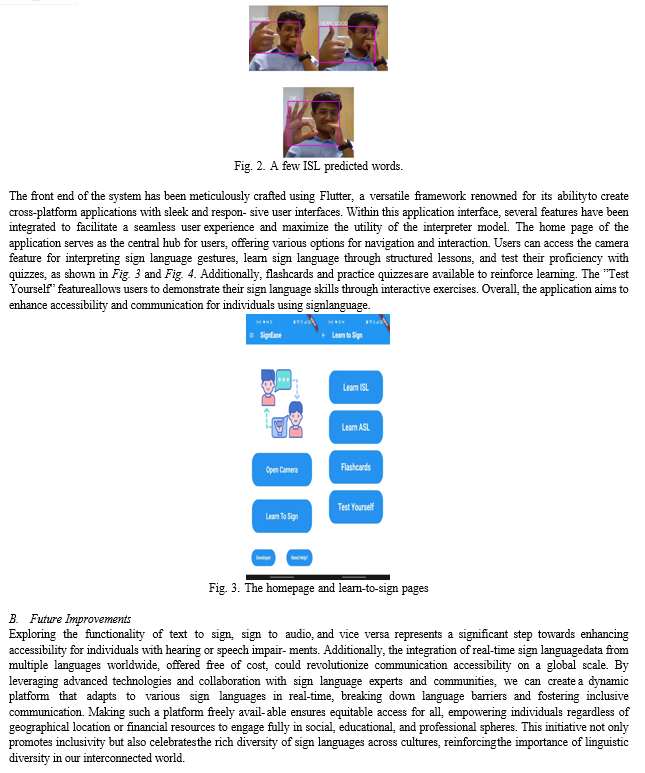

This approach marks a departure from conventional methodologies by extending the interpretative scope to encompass the intricacies of ISL, thereby enhancing inclusivity and accessibility for individuals who rely on this form of communication. By accommodating the complexities inherent in two-handed ISL gestures, the system underscores a commitment to comprehensiveness in sign language interpretation, ensuring a more nuanced and accurate portrayal of users’ expressions and intentions. A few trained and tested ISL hand gestures with accurate interpretation have been shown in Fig. 1 and Fig. 2 for letters and words, respectively.
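
The abstract describes grid-based feature extraction that turns a hand pose into a concise feature vector, but the paper does not spell out the exact definition. The sketch below shows one plausible reading, under stated assumptions: the input is a list of (x, y) hand landmarks such as those a hand detector like MediaPipe would supply, the landmarks' bounding box is split into a square grid, and each feature is the fraction of landmarks in a cell. The grid size, the function name, and the sample points are hypothetical.

```python
def grid_features(landmarks, grid_size=4):
    """Map (x, y) hand landmarks to a flat grid-occupancy feature vector.

    The bounding box of the landmarks is divided into grid_size x grid_size
    cells; each feature is the fraction of landmarks falling in that cell.
    """
    xs = [x for x, _ in landmarks]
    ys = [y for _, y in landmarks]
    min_x, min_y = min(xs), min(ys)
    # Guard against zero width/height when all points share a coordinate.
    width = (max(xs) - min_x) or 1.0
    height = (max(ys) - min_y) or 1.0

    counts = [0] * (grid_size * grid_size)
    for x, y in landmarks:
        # Points on the far edge are clamped into the last row/column.
        col = min(int((x - min_x) / width * grid_size), grid_size - 1)
        row = min(int((y - min_y) / height * grid_size), grid_size - 1)
        counts[row * grid_size + col] += 1
    return [count / len(landmarks) for count in counts]


# Four made-up landmark positions (a real hand detector would supply more).
points = [(0.10, 0.10), (0.90, 0.10), (0.10, 0.90), (0.90, 0.90)]
vector = grid_features(points, grid_size=2)
```

Normalizing by the bounding box makes the vector independent of where the hand appears in the frame and how large it is, which is one reason a grid representation can be compared directly against stored examples.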

Conclusion

A. Drawbacks

In summary, the significance of sign language interpreters cannot be overstated in facilitating effective communication and equitable access to information for the deaf and hard-of-hearing community. Despite the strides made in leveraging technological advancements like video relay services and mobile applications, it is crucial to acknowledge the current limitations: the model reaches an accuracy of 80%, and the set of gestures it can map is restricted by the constraints of the Teachable Machines tooling used during training. Integration of the backend and frontend systems is planned as a future enhancement to improve functionality and usability.

B. Scalability

Exploring text-to-sign and sign-to-audio functionality, along with the reverse directions, can significantly enhance accessibility for individuals with hearing or speech impairments. Partnering with NGOs, schools, and hospitals enables tailored solutions to address real-world needs. By integrating these technologies, we can empower individuals in education, healthcare, and everyday interactions, fostering a more inclusive society.

References

[1] ”WHO - Deafness and hearing loss”, World Health Organization, accessed January 19, 2022.

[2] An Improved Sign Language Translation Model with Explainable Adaptations for Processing Long Sign Sentences, Jiangbin Zheng, Zheng Zhao, Min Chen, Jing Chen, Chong Wu, Yidong Chen, Xiaodong Shi, and Yiqi Tong, Department of Artificial Intelligence - School of Informatics, Xiamen University, Xiamen 361005, China; China Mobile (Suzhou) Software Technology Co., Ltd., Suzhou 215000, China; Hong Kong.

[3] Domain Bounded English to Indian Sign Language Translation Model, Syed Faraz Ali, Gouri Sankar Mishra, Ashok Kumar Sahoo, International Journal of Computer Science and Informatics, Volume 4, Issue 1, Article 6, July 2014.

[4] Sign Language Interpreter, Mr. R. Augustian Isaac, S. Sri Gayathri, International Research Journal of Engineering and Technology (IRJET), e-ISSN: 2395-0056, p-ISSN: 2395-0072, Volume: 05, Issue: 10, Oct 2018.

[5] Voicer: a Sign Language Interpreter Application for Deaf People, Hardik Rewari, Vishal Dixit, Dhroov Batra, Hema N, Department of Computer Science and Information Technology, JIIT, Noida, Proceedings of 2018 Eleventh International Conference on Contemporary Computing (IC3), 2-4 August, 2018, Noida, India.

[6] Systematic Literature Review: American Sign Language Translator, Andra Ardiansyah, Brandon Hitoyoshi, Mario Halim, Novita Hanafiah, Aswin Wibisurya, 5th International Conference on Computer Science and Computational Intelligence 2020, ScienceDirect.

Copyright

Copyright © 2024 Purvi Passi, Jessica Pereira, Anjelica Misal, Viven Menezese, Dr. M. Kiruthika. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET60728

Publish Date : 2024-04-21

ISSN : 2321-9653

Publisher Name : IJRASET
